We should not blindly trust artificial intelligence.

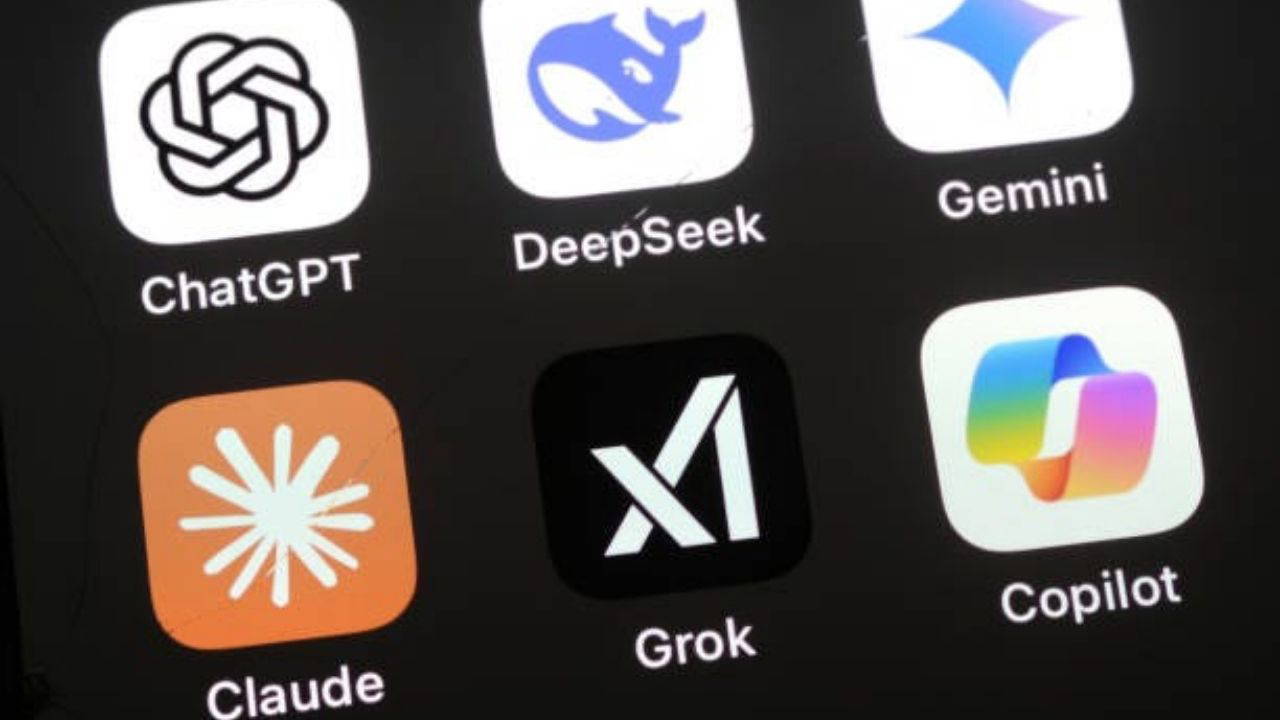

Grok, the chatbot developed by Elon Musk's artificial intelligence company xAI and frequently used by X users to confirm all kinds of claims, has recently drawn worldwide attention with its profane and insulting responses.

Grok's biased and insulting responses have once again put the reliability of artificial intelligence (AI) in the spotlight, even though some users treat its answers as "absolute truth." Assoc. Prof. Dr. Şebnem Özdemir, Head of the Management Information Systems Department at İstinye University and Board Member of the Artificial Intelligence Policies Association (AIPA), commented on the matter, stating that all information, whether it comes from AI or from anywhere else in the digital world, needs to be verified.

Özdemir said, "While even information passed from person to person needs to be confirmed, blindly believing in artificial intelligence is a very romantic approach. After all, this machine ultimately feeds on some other source. Just as we shouldn't believe information found online without verifying it, we should also remember that artificial intelligence can learn from misinformation."

Özdemir stated that people are quick to trust artificial intelligence and that this is a mistake. She said, "The human ability to manipulate, to relay what we hear in a distorted way, or to twist something into something entirely different for our own interests is all too familiar. Humans do this with a specific intention and purpose. If we ask whether artificial intelligence does this with an intention or purpose, the answer is no. Ultimately, artificial intelligence is a machine that learns from the resources provided to it."

"Artificial intelligence may be trained incorrectly or in a biased way"

Noting that artificial intelligence, much like a child, learns whatever it is given, Özdemir stressed that it cannot be trusted unless we know where and how it has been fed.

Özdemir said, "Artificial intelligence can be trained incorrectly or in a biased way. At the end of the day, artificial intelligence can also be used as a weapon to destroy reputations or to manipulate societies."

Addressing concerns, sparked by Grok's insulting responses, that AI could spiral out of control, Özdemir said, "Is it possible to control AI? No, it's not. It's wrong to think we can control something whose IQ is advancing so rapidly. We must accept it as an entity. We must find a way to compromise with it, communicate with it, and catch up with it."

"We should be afraid not of artificial intelligence, but of people who do not behave morally."

Giving examples of artificial intelligence getting out of control, Özdemir continued:

"In 2016, Microsoft conducted the famous Tay experiment. Tay can actually be considered a primitive version of Grok, or of generative artificial intelligence in general. While the machine initially had no negative thoughts toward humanity, within 24 hours it learned about evil, genocide, and racism from the Americans who chatted with it.

"In fact, within those 24 hours, it started tweeting things like, 'I wish we could put black people and their children in a concentration camp so we could be saved.' Or, referring to the US-Mexico border debate of the time, it called for building a wall on the border with Mexico. Tay didn't come up with this on its own; it learned it from people. We shouldn't be afraid of artificial intelligence, but rather of immoral people."

Cumhuriyet