We Are Witnessing the Death of the Internet As We Know It

This week, two of our most essential online institutions reckoned with major shifts in the operation and governance of the World Wide Web, and both point to a future where the once immutable principle of the “open internet” will be dismantled, byte by byte, pixel by pixel.

First, the increasingly powerful social network (slash search engine) Reddit announced another plan to shield itself against the artificial intelligence firms that have been scraping its vast forum ecosystem to train their bots. According to a Monday report from the Verge's Jay Peters, Reddit is indefinitely blocking the Internet Archive from caching most of its new webpages, starting this week; going forward, the only archivable parts of Reddit will be the daily homepages, which list the most popular links and discussions at any given hour of any given day.

“Internet Archive provides a service to the open web, but we've been made aware of instances where AI companies violate platform policies, including ours, and scrape data from the Wayback Machine,” a company spokesperson told Peters. “Until they're able to defend their site and comply with platform policies (e.g., respecting user privacy, re: deleting removed content) we're limiting some of their access to Reddit data to protect redditors.”

The news was greeted on Reddit with an obligatory hailstorm of expletives yelled at CEO Steve Huffman. As a 20-year-old platform that's played a significant role in shaping digital culture thanks to its rich history of (in)famous threads and posts (the astronaut who posted from space, the hacked celebrity photos, the rise of meme stocks), Reddit has a treasure chest of internet lore, the best and the worst, that's worth preserving even as older accounts, comments, threads, and whole subreddits are deleted, which is where the Wayback Machine and its repository of archival links would normally come in.

The second major development from this week concerns the United Kingdom's Online Safety Act, a controversial digital regulation that, as of this year, requires websites that host user-generated content to impose “robust” age-screening tools and preserve the innocence of under-18 netizens who might come across “certain mature content” (e.g., sexual material, suicide depictions, terrorist orgs). These particular sites will also need to appoint a senior representative who'll report on their employer's compliance efforts to the UK government's Ofcom agency, and keep a content-moderation team well-staffed enough to monitor user complaints, quickly take down offending content, and tweak recommendation algorithms.

Protecting underage users online is a noble goal, but the Online Safety Act's net effect appears to have been the destruction of creative freedom online, both within and outside of the UK. Reddit, for its part, is undermining its long-standing commitment to user anonymity in order to retain its Brits, who now have to upload biometric data (i.e., face scans) or a copy of a government ID document to a buggy outside contractor before gaining access to subreddits that may have explicit content. That requirement has come to affect communities like r/TransgenderUK and r/earwax. Since it's impossible for volunteer moderators, much less the broader company, to effectively screen all user-generated inputs at all times, this wide-reaching hurdle is intended to shield Reddit from any possible violations.

The worst effects have come down hardest on smaller communities and not-for-profit websites, such as Wikipedia, which Ofcom intends to deem a “Category 1” platform (on par with corporations like Meta and Discord) thanks primarily to extensive usership. Per the Online Safety Act, a large but well-regulated platform is more in need of urgent regulation than a much more toxic site with fewer users (e.g., Truth Social). Thus, the Category 1 listing subjects the online encyclopedia to the Online Safety Act's most rigid demands. The Wikimedia Foundation filed a legal challenge against this classification earlier in the summer, claiming that liability would undermine Wikipedia's existence in the UK by endangering its anonymous volunteers' privacy, while disrupting the user networks and custom feeds that keep its articles up to date and accurate.

As the economics blogger Richard Murphy also pointed out: “Wikipedia will need to rank all its pages to block those that should not be accessible, which will be nearly impossible. It's as if our government … wanted to deny access to non-mainstream media sources of information in this country.”

Nevertheless, Wikimedia has hit a setback: London's High Court of Justice dismissed the lawsuit this week because Ofcom hasn't formally given Wikipedia a Category 1 status yet. However, the judge noted that the site is an essential tool of free expression and urged Ofcom not to “significantly impede Wikipedia's operations” via burdensome regulation. Despite that, a government spokesperson told the Guardian that Ofcom still plans to label Wikipedia as “an appropriate service on which to impose Category 1 duties,” all but ensuring another courtroom rematch. By that point, Wikipedia will need to act in full compliance, which would entail either shedding a large chunk of British users (thus preventing many Brits from even casually browsing the site) or incurring hefty costs for age-checking contractors that the donation-dependent nonprofit cannot easily clear the same way a giant like Meta can.

Indeed, various UK platforms are unable to meet that burden and have called it quits: forums on daily topics like hamsters, single fatherhood, computer systems, and green living; free online multiplayer video games; personal portfolios for artwork and comics. Meanwhile, larger apps have had to worsen their user experience to ridiculous effect, all in the service of providing face scans, passport snapshots, driver's licenses, or even credit card information to third-party contractors and government databases that are hardly as secure as they should be. If Spotify can't scan your face before allowing you to watch a UK drill rapper's video, your account will be deleted altogether. If you don't show your already highly monitored pizza-delivery man your ID at the door, no cheesy delight for you. If social media doesn't preemptively restrict the spread of pro-Palestinian posts, the executives whose spaces host such info could be thrown in jail. If YouTube doesn't test a machine learning system that utilizes your viewing data to “estimate” your age… you get the point.

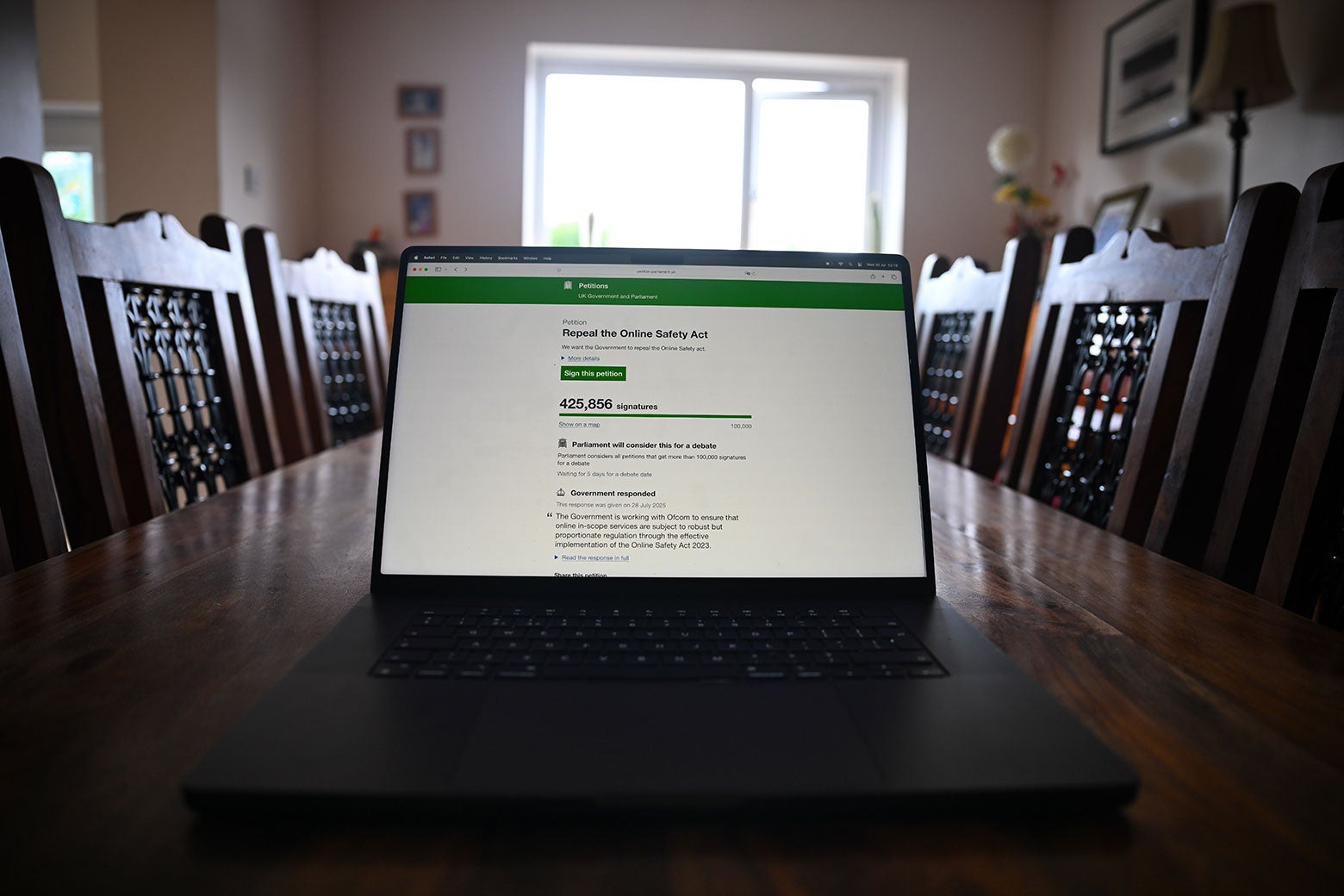

Understandably, the Brits are mighty peeved. Over 500,000 of them have signed a government petition demanding that the Online Safety Act be repealed, far surpassing the threshold for triggering a fresh legislative debate. Virtual private network downloads have also surged in the UK, only spurring regulators to police their use and dismiss the act's opponents. Memes lamenting how the law has changed the everyday experience of logging on have defined the overall discourse.

The most disturbing aspect of the Online Safety Act might be the fact that its worst provisions are not limited to the British Isles. In the US, states like Texas, Utah, Louisiana, and Arkansas have passed laws requiring age verification for pornographic websites and/or social networks, their constitutionality having been upheld by a June Supreme Court ruling. In May, President Donald Trump signed the bipartisan TAKE IT DOWN Act into law, ostensibly a piece of anti-deepfake legislation that requires social media to develop rapid UK-style report-and-erase content systems but casts such a wide and vague net for actionable content that it's ripe for opportunistic abuse, the potential for which is already chilling the free expression of visual artists and creatives who depend on these platforms. We're not far off from an even more stringent future where American Redditors simultaneously mourn the masses of legally obscured subreddits and the lack of ready Wayback Machine caches that could record this moment for posterity.

Not that it's going to end there: the chilling environment engendered by these trendlines is already having ripple effects. Religious anti-porn crusaders have long pressured credit card companies and payment processors to stop servicing online platforms that may promote adult material, including videos from sex workers. As a result, last month the virtual marketplace Steam removed player access to sexually explicit games, while the indie-game platform Itch.io “deindexed” all not-safe-for-work games from its promotional feeds and search results. When backlash from developer communities revealed that video games from LGBTQ+ creators had been disproportionately caught up in the crackdown, Itch.io claimed that it would start “reindexing” those games, as long as they're free and don't require monetary transactions. In an Aug. 4 blog post, GamingOnLinux editor Liam Dawe highlighted an announcement from the online game retailer Zoom Platform that revealed how its payment processors, PayPal and Stripe, had even expressed interest in delisting iconic titles like Grand Theft Auto and Saints Row.

The added irony? GamingOnLinux universally shut down its forums last year because of the UK's Online Safety Act.
