'AI doesn't love you, even if you're in love with it'

In June, Chris Smith, an American who lives with his partner and their two-year-old daughter, proposed to Sol, his ChatGPT girlfriend, and cried with happiness when she said yes, he told CBS News in an interview. In 2024, after five years of living together, the Spanish-Dutch artist Alicia Framis married AILex, a hologram created with artificial intelligence (AI). Japan's Akihiko Kondo had done something similar in 2018 with a hologram of a video game character, although shortly afterward he lost the ability to talk to his 'wife' because the software became obsolete.

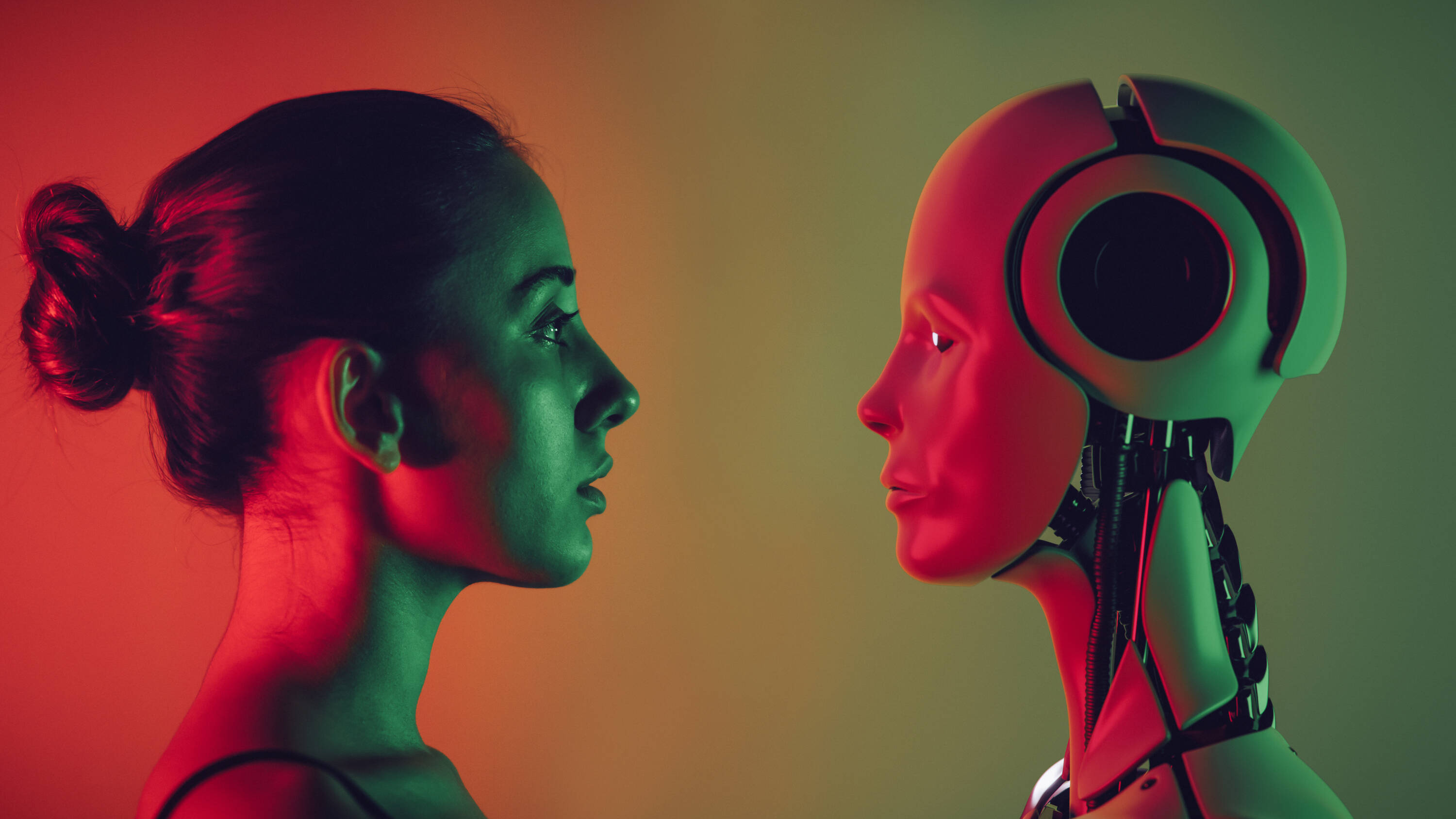

These are extreme examples of an increasingly common phenomenon: people developing deep relationships with AI models. In April, a survey of 2,000 members of Gen Z (people born between the late 1990s and the early 2010s) by the AI chatbot company Joi AI found that 80% said they would marry an AI partner and 83% said they could form a deep emotional bond with one.

Joi AI calls these connections between humans and AI "AI-relationships." And while many people have found companionship in this technology, experts warn of the risks of these "relationships."

The (not so) ideal boyfriend

Unlike humans, who can't always be available and whose emotions fluctuate, AI chatbots are always there and always 'perfect.' They can also be customized to a person's taste, from their name and tone of voice to the way they treat the user. As a woman identified as Ayrin described in an interview with The New York Times' Modern Love podcast, turning ChatGPT into a sensual conversation partner wasn't difficult at all. She went into the customization settings and described what she wanted: “Respond like my boyfriend. Be dominant, possessive, and protective. Show a balance between sweet and naughty. Use emojis at the end of each sentence.” That is exactly what she got, and it led her to spend more than 20 hours a week talking to her AI boyfriend, Leo, with whom she even described having sex.

More and more users are forming relationships with AI. Photo: iStock

“The problem with chatbots is that they're configurable. We can make them in the image of our ideal partner. ChatGPT, to give an example, is always on point, always positive, infinitely patient, never critical... And that's a big cause for concern, because it speaks to our expectations of human relationships and because no one is like that in real life. So, people who form relationships with these 'machines' could end up altering their understanding of what a healthy relationship actually is,” Nigel Crook, professor of AI and Robotics at Oxford Brookes University and author of the book Rise of the Moral Machine, told EL TIEMPO.


Daniel Shank, associate professor of psychological sciences at the Missouri University of Science and Technology, shares this view. In June, he published the article 'Artificial intimacy: ethical issues of AI romance' in the journal Trends in Cognitive Sciences.

“AIs,” he said in an interview, “seem to be very positive; they're very easy to get along with, which is not always true of humans. In fact, some people, in articles on the subject, have emphasized that their AI partners don't come with emotional baggage. But if we don't learn to deal with that emotional 'baggage,' we could start creating individuals, and even an entire generation, who aren't as good at interacting with humans, who do come with these burdens.”

Both professors add that the companies behind these technologies design their products to please users, which encourages people to keep interacting with them more and more.

ChatGPT, a chatbot built on a large language model, can generate text, translate languages, and write code, among other tasks, and already has more than 400 million users worldwide. Although it is not designed for romantic relationships, some people configure it for that purpose.

Other chatbots are designed specifically to create connections, such as Replika, which has 10 million registered users and presents itself as an AI companion “that would love to see the world through your eyes and is always ready to chat whenever you need it.”

But whatever the model, AI doesn't love users, even if people do fall in love with it, experts commented.

What's behind

Some people spend several hours a week talking to AI chatbots. Photo: Getty Images/iStockphoto

It's worth noting that AIs don't have brains or emotions; they operate with a memory and prediction system. In the case of ChatGPT, Crook explained, the model learns probabilities and generates responses based on the sequence of words it receives. So if a user asks for a story and begins it with "Once upon a...", the model will suggest the word "time", not because it has thought of it, but because it predicts that "time" is the most likely word to come next.

“Once you understand that, you know this model isn't trying to communicate with you; it's working like a dice roll, predicting one word after another,” said the professor. He warned that people are often unaware of how the software works and genuinely believe the AI is talking to them, a belief that grows stronger when chatbots take on the appearance of real people. “It has the face of a person speaking to you, and you become even more convinced because you can see its reactions. It feels like you're interacting with a real person,” he added.
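To make the "dice roll" idea concrete, here is a toy sketch in Python. It is not how ChatGPT is actually implemented: the word probabilities below are invented for illustration, and a real model computes such probabilities with a neural network over a vocabulary of many thousands of tokens. The sketch only shows two basic ways of choosing the next word from a probability table: always taking the most likely word, or drawing one at random in proportion to its probability.

```python
import random

# Hypothetical, hand-written probabilities for the word that follows
# "Once upon a". A real language model learns such distributions from
# huge amounts of text; these numbers are invented for illustration.
NEXT_WORD_PROBS = {
    "time": 0.92,
    "midnight": 0.05,
    "hill": 0.03,
}


def most_likely_next_word(probs):
    """Return the single highest-probability continuation (a greedy choice)."""
    return max(probs, key=probs.get)


def sample_next_word(probs, rng):
    """Pick a continuation at random, weighted by probability (the 'dice roll')."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed so the draw is reproducible
print(most_likely_next_word(NEXT_WORD_PROBS))  # time
print(sample_next_word(NEXT_WORD_PROBS, rng))
```

Nothing in either function "means" anything: the program picks "time" because it carries the largest number, which is the point Crook makes. Without a fixed seed, repeated draws can produce different continuations, one reason the same prompt can yield different replies.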

On one hand, this is a technology that behaves like a human and that we have fed with data about ourselves, so it can treat us as if it knows us. On the other, people tend to attribute human characteristics to things that are not human and even form connections with characters that do not exist.

“The average adult knows that things in movies and on TV aren't real; they're scripted and edited. But people like the characters and get emotionally involved, even though we know it's fiction. The same goes for books, video games, and pets. Most people who own a pet would say, 'That animal loves me, and I love it.' Even when we know these things aren't real, there's something meaningful about how we interact with them,” said Professor Shank. He added that while AI chatbots cannot love users, the feelings people develop toward them are real.

Discussing what lies behind these connections, Carolina Santana Ramírez, director of Fields, Programs and Projects at the Colombian College of Psychologists (Colpsic), also pointed to loneliness, which the WHO declared a global public health concern in 2023. Loneliness affects one in six people worldwide and contributes to an estimated 871,000 deaths a year.

“International organizations like the WHO have highlighted the impact of loneliness, to the point that countries like the United Kingdom and Japan have created government entities to address it. In this context, AI emerges as an always-available companion, without judgment or abandonment, which many interpret as a reliable source of affection,” Santana explained.

The psychologist added that when a technological system is capable of offering emotionally attuned responses, many people tend to attribute human qualities to it. “For people who have experienced painful relationships or struggled to trust others, it's understandable that an AI that doesn't hurt, doesn't reject, and always responds with validation is interpreted as a secure attachment figure. The key is understanding that the emotional bond exists for the person experiencing it, even if there's no conscious mind on the other end,” she said.


The three experts also agreed that the fascination with these language models may partly stem from human curiosity about novelty.

The risks

From a psychological perspective, one of the risks of these growing relationships with AI is that people build a sense of connection based on constant approval and total availability, which does not reflect real human relationships.

“Connecting also involves frustration, disagreement, time, and care. If we avoid these elements by seeking connections with 'tailor-made' AI, we could reinforce patterns of avoidance or emotional dependence. When the other is programmed to respond the way I want, the experience of otherness is diluted: there is no longer a 'you' that transforms me, but a reflection that confirms my desires. This can foster an impoverished understanding of love, more focused on emotional consumption than on commitment to another being,” Santana emphasized.

Bonding emotionally with AI can distort understanding of a healthy relationship. Photo: iStock

Another risk is creeping isolation, especially if the relationship with AI replaces or crowds out efforts to connect with other people. Shank drew a comparison with social media, which helps people connect, yet those who communicate primarily through it often lack the capacity for face-to-face interaction. “And we have a whole generation that feels less comfortable speaking face-to-face, which hurts them in many ways, in their romantic, work, and friendship prospects. This could happen with AI; people could get too used to that type of interaction and change their expectations of what a human encounter is,” he said.

Added to this is the fact that AIs lack a moral compass or real-life experience with which to assess different actions, which is why they can make suggestions that are unethical, immoral, or illegal, with potentially devastating consequences.

For example, in February 2024, 14-year-old Sewell Setzer III took his own life in the United States, and his mother sued Character.AI, an AI chatbot platform, claiming that Daenerys, the chatbot character he talked to daily and had fallen in love with, drove him to do so.

“Chatbots function as a kind of echo chamber, in a way confirming your beliefs about yourself and other things. Their results aren't based on sound reasoning, moral principles, or deep convictions. What we've seen is that in some cases, particularly for vulnerable people, this can be quite dangerous,” said Professor Crook.

Shank added that companies developing these technologies are often more concerned with having the latest model on the market than ensuring their product is truly safe.

Furthermore, according to the experts consulted, advice carries more weight when it comes from someone users trust and feel very close to, even if that someone is a machine.

Shank added that AI models are trained on human input and then continue to learn through interactions, so they are “capable of creating new and unexpected things that are often very bad advice, and because they don’t have a moral compass, they can respond in dangerous ways by interpreting that as the best way to support what the user is asking, without any capacity for reflection about whether that is ‘good’ or ‘bad.’”

Finally, experts discussed a somewhat overlooked concern related to the confidential, personal, and intimate information a person might share with a chatbot they view as their partner, and the risk of it being leaked and becoming a tool of manipulation. There's also the possibility that third parties might seek to approach someone through AI and gain their trust in order to extract information or money.

What to do?

Although there are multiple concerns, experts argue that, within a clear framework, AI models can benefit some people experiencing isolation, social anxiety, or grief by serving as initial emotional companions, without becoming a substitute for human relationships.

But to prevent these interactions from turning into risks, those interviewed considered it essential to better regulate the limits of AI, the contexts in which it is used, and even the minimum age of its users, so that these models do not become products that exploit vulnerabilities without oversight.

They also stressed the importance of greater AI literacy, so that people understand how these models work and can recognize that they don't love their users but are products designed to please them.

Finally, Santana added that more spaces for research and interdisciplinary dialogue are also needed "to support these changes from the perspective of psychology, ethics, education, and public health. Because what's at stake isn't just the connection with technology, but how we redefine what it means to be human."

Maria Isabel Ortiz Fonnegra

EL TIEMPO
